2021 Finals Review

A few weekends ago marked the end of the 7th annual CPTC season. CPTC is now an international competition, with over 47 teams competing in the regional events and 15 teams competing in the final event. Our final event this year was a hybrid event, taking place both in person at the new RIT Cyber Security Center and simultaneously online. I chose to participate online (as did several other volunteers and student team members), just to play it safe with the recent COVID-19 Omicron surge. That said, many people participated in person and followed strict pandemic protocols: being fully vaccinated, wearing masks, and properly distancing throughout the competition. In the end, we struck a nice balance between in-person participation and accommodating remote participants. Further, I haven't heard any stories about people getting sick after the competition, so I think we pulled it off without any incidents. Now let's focus on the competition. This year, the winning teams were as follows:

1st place: @calpolypomona

2nd place: @Stanford

3rd place: @tennesseetech

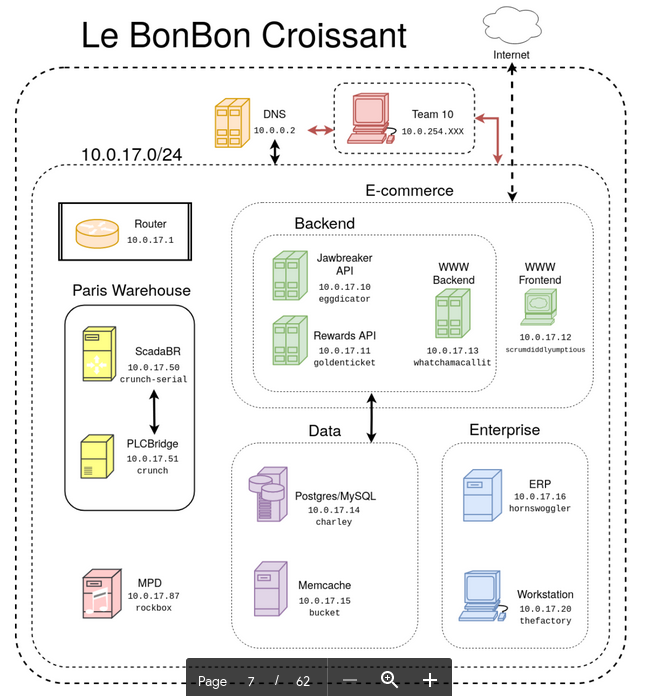

We also have a hall of fame going forward, where we will document the winners from each year. This is a nice improvement, as it creates a unified place to see both our themes and the top teams from each regional and the Finals each year. We also have our blog, which will include a number of breakdowns and advice articles for succeeding in CPTC throughout the year. Our theme for the competition this year was the bakery and candy manufacturer La BonBon Croissant, or LBC for short. This was a very fun network; although it was almost entirely Linux, it included a number of Easter eggs and unique elements, such as our music server and PLCs for the warehouse. There were also a number of back-end APIs and our active rewards system.

This year the environment included several PLCs in an emulated factory environment. Not only could students interact with the devices on the network, but these were all emulated and visualized through an interactive warehouse application. This year we tried to set the students up for success, rather than let them fall into the traps (as with the Dam last year), by asking them for a PLC testing plan before we included the fragile systems in the scope of the pentest. Despite the plans, many teams still overwhelmed and knocked over the PLCs. This was partially by design, as the systems had very low resources, so overwhelming them was fairly easy. Regardless, the competition always sees some cool innovations along with common mistakes. We are seeing a lot more kill chain diagrams in the team reports, describing how the threat actor exploited and moved throughout the network. I really like this perspective, as it quickly shows the key vulnerable elements that let them penetrate further into the network. We also saw the return of jVision, a custom web tool used by the Cal Poly students to cooperatively label and work through network scans together. I'm a big fan of these cooperative tools, which allow the students to do analysis work together and share notes through annotation. I have an entire presentation on this type of tooling, and I talk about it a bunch in my book as well.

This year Stuart ran the OSINT and World team, while I refocused on the overall scoring of the game. Stuart, Rachel, Lucas, and I brainstormed for a while on how to score the interactions within the game. Ultimately, we wanted to weight the interactions, injects, and presentations more heavily this year, as we felt these elements helped maintain the client relationship in many ways more than a report alone. We arrived at a method for averaging interactions together, such that more interactions don't automatically produce a higher score but can still help a team recover from a negative event. Overall, the OSINT and World content was excellent this year, and we saw many creative ways of analyzing it, such as Maltego graphs. These graphs helped teams identify key characters and find information disclosed via OSINT, such as the Swagger file. This type of auxiliary information can be extremely helpful in the competition for interacting with key characters or important systems.
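The averaging idea can be illustrated with a small sketch. This is a hypothetical example, not the actual CPTC scoring code: it assumes each in-character interaction receives a numeric grade on an assumed 0-10 scale, and that a team's interaction score is simply the mean of those grades. The names `interaction_score`, `steady`, `few`, and `recovered` are made up for illustration.

```python
from statistics import mean


def interaction_score(grades: list[float]) -> float:
    """Average all graded interactions for a team (assumed 0-10 scale).

    Because this is a mean rather than a sum, piling on more interactions
    does not by itself raise the score, but a strong follow-up interaction
    pulls the average back up after a poor one.
    """
    if not grades:
        return 0.0  # assumption: a team with no graded interactions scores zero
    return mean(grades)


# Hypothetical teams
steady = interaction_score([7, 7, 7, 7, 7, 7])  # many interactions of the same quality
few = interaction_score([7, 7])                 # fewer interactions, same quality
recovered = interaction_score([2, 8, 8])        # a bad event, then good follow-ups

print(steady, few, recovered)
```

Under this sketch, `steady` and `few` come out identical, so volume alone earns nothing, while `recovered` climbs well above the single bad grade. A plain sum would instead reward whichever team opened the most tickets or emails.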

By this stage of the competition, the Finals, the reports were well honed. I could tell many teams are now using well-structured templates and treating the test like a second penetration test or retest. This is good, because I put a big focus on overhauling the report rubric this year and put together a few documents with guidance for our report graders. We had a wide variety of reports during the regionals, and the reports were weighted more heavily in the regionals, as we had fewer interactions and no presentations. This means only stellar reports made it through to the Finals, which we def saw in the quality of reporting this year. We will be publishing all of the reports from Finals, again on our GitHub, so be sure to keep an eye on it throughout the year. I will also be doing another post on my favorite reporting aspects this year, which can certainly give people a leg up with the grading.

This competition and the competitors never cease to amaze me. The Stanford team found another 0-day this year, this time in ScadaBR. These are not super complicated memory corruption bugs, but rather high-value bugs in the web applications. This bug allowed them to make unauthenticated requests and extract credentials from the application! I really like that these are usable bugs that grant the students further access. That said, we've decided to respect the researchers and obfuscate the bug until it is patched; unfortunately, ScadaBR has been slow to respond and patch. The students will often take note of the infrastructure used in the regionals and research this infrastructure before Finals. This competitive spirit is very inspiring to me, and it is exactly the research advantage I write about in chapter 7 of my recent book. Either way, it's an incredible find for this year and certainly an amazing tactic in the competition as well.

As previously mentioned, I gave up leading the OSINT / World team this year to focus on scoring and rebalancing the game. We wanted a heavier emphasis on in-character interactions this year for Finals. Once we had a rough idea of the interactions rubric, we ran several models with different scoring combinations and weights. The goal was to give teams more room to separate themselves from one another in the scoring. We also ran several "total" models with different team performance profiles, showing how teams could excel in any one area, yet a balanced score was still more valuable. Once we were happy with these models, I spent considerable time writing documents to help guide graders, and then reviewing grades to make sure they fit the models. Since the regional events didn't include a presentation, only the report, we also decided to weight the presentation a bit more heavily for Finals. Ultimately, I think these models helped us plan and create a better game.
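A "total" model of this kind can be sketched as a weighted sum over scoring categories, evaluated against a few team profiles. Everything numeric here is an assumption for illustration: the post does not publish the real category weights or rubric scales, so `WEIGHTS`, `PROFILES`, and `total_score` are hypothetical, with each category assumed to be scored 0-100.

```python
# Hypothetical category weights for illustration only; the real CPTC weights
# are not given here. They sum to 1.0, so a team scoring 100 in every
# category would total 100.
WEIGHTS = {
    "report": 0.35,
    "presentation": 0.25,  # presentations were a Finals-only element
    "interactions": 0.25,
    "injects": 0.15,
}


def total_score(profile: dict[str, float]) -> float:
    """Weighted total for a team profile where each category is scored 0-100.

    Categories missing from the profile count as 0.
    """
    return sum(weight * profile.get(category, 0.0) for category, weight in WEIGHTS.items())


# Hypothetical team performance profiles to compare under the model.
PROFILES = {
    "report specialist": {"report": 100, "presentation": 50, "interactions": 50, "injects": 50},
    "balanced": {"report": 70, "presentation": 70, "interactions": 70, "injects": 70},
}

if __name__ == "__main__":
    for name, profile in PROFILES.items():
        print(f"{name}: {total_score(profile):.1f}")
```

With these made-up numbers the balanced profile edges out the specialist (70.0 vs 67.5), but that outcome depends entirely on the assumed weights and profiles. The value of writing the model down is that swapping in a different `WEIGHTS` dictionary makes it quick to compare candidate weightings side by side.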

This year heavy scanning would cause some of our more sensitive services to go down, so, unsurprisingly, we got a lot of help desk tickets with teams asking us to reboot or redeploy services. I manned our IT help desk during the Finals, trying to keep our ticket queue moving and ultimately clear. The key to success here was triaging the tickets and delegating them to available people on the appropriate team (apps, infra, world, or injects). I think we did a good job of responding to tickets quickly, as we got some nice compliments on our response times during the closing ceremony. One thing I did was investigate teams that took services down repeatedly or came to us too often. Some of these teams claimed it wasn't their fault, and I would have to pull logs or metrics that showed otherwise. Teams that handled these events well or owned the mistake often did better on these interactions, whereas teams that denied these issues obviously got worse scores. All this is to say, help desk tickets can lead to positive interactions, and if someone is going to the trouble of pulling logs or more information, then you should try to work with them as best you can.

There you go, that's a short recap of some of my highlights this year at CPTC. I should also mention that the memes this year were absolutely fire. We are going to start a new CPTC dev team soon called the Meme Team just so we can clap back at some of these amazing memes. You can find the dev team's closing presentation here, which is equally laden with memes. We also made some news again, but if you know of any additional coverage, please post it in the comments! Looking forward to next year, we will be publishing more guidance on the CPTC blog and our YouTube channel. We've already begun planning our scenario for next year, and I'm already looking forward to another season in 2022! This post has been cross-posted from lockboxx.